Project

Introduction

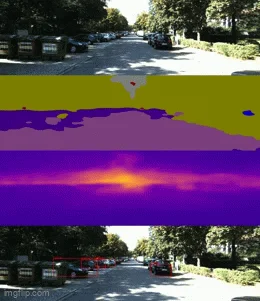

This project implements HydraNet, a multi-task learning architecture that unifies three critical perception tasks for autonomous driving into a single efficient network. The system leverages a shared MobileNetV2 encoder with task-specific decoders to perform object detection, semantic segmentation, and depth estimation simultaneously. Unlike traditional approaches requiring separate models for each task, HydraNet reduces computational redundancy by sharing feature extraction across tasks while maintaining high accuracy through careful architectural design. The implementation features dual detection head support, allowing seamless switching between YOLOv8 (72.1% mAP) and SSD (40.9% mAP) based on deployment requirements. The architecture incorporates Light-Weight RefineNet for segmentation and depth tasks, achieving 75.4% mIoU and 3.89m RMSE respectively. Through knowledge distillation and dynamic loss balancing with uncertainty-based weighting, the model prevents task dominance while maintaining 87.6% performance retention for segmentation and 99% for detection compared to standalone baselines. The system achieves real-time performance at 40+ FPS on Jetson edge devices through mixed precision training and optimized inference pipelines.

Objectives

-

To develop a unified multi-task perception model reducing computational redundancy by 65% compared to separate models

-

To implement interchangeable YOLOv8 and SSD detection heads for deployment flexibility

-

To achieve real-time inference (>30 FPS) on edge GPU platforms while maintaining accuracy

-

To integrate knowledge distillation from specialized teacher models for improved multi-task learning

-

To implement dynamic loss balancing preventing task dominance during joint training

-

To demonstrate successful feature sharing across perception tasks through shared encoder architecture

Tools and Technologies

-

Framework: PyTorch 2.0 with CUDA 11.8

-

Encoder: MobileNetV2 (pretrained on ImageNet)

-

Decoders: Light-Weight RefineNet with CRP blocks

-

Detection Heads: YOLOv8 (anchor-free) / SSD300 (anchor-based)

-

Datasets: KITTI Vision Benchmark + BDD100K

-

Loss Functions: CIoU+DFL+BCE (YOLO), MultiBox (SSD), BerHu (depth), CrossEntropy (segmentation)

-

Optimization: AdamW with OneCycleLR scheduling

-

Augmentation: Albumentations with bbox-aware transforms

-

Post-processing: Task-aligned NMS, Distribution Focal Loss

-

Visualization: Supervision, OpenCV, Matplotlib

-

Mixed Precision: PyTorch AMP for faster training

-

Version Control: Git with modular architecture

Source Code

-

GitHub Repository: HydraNet Multi-Task

-

Documentation: Architecture and training details

Video Result

-

YOLOv8 Demo: Multi-task inference showing tighter bounding boxes with 72.1% mAP

-

SSD Demo: Memory-efficient variant with 35% lower GPU usage

-

Architecture Diagram: Shared MobileNetV2 encoder branching to task-specific decoders

Process and Development

The project is structured into six critical components: shared encoder implementation, multi-scale decoder design, dual detection head architecture, multi-task loss formulation, knowledge distillation pipeline, and inference optimization.

Task 1: Shared MobileNetV2 Encoder Implementation

Inverted Residual Blocks: Implemented 7-layer MobileNetV2 backbone with expansion factors [1,6,6,6,6,6,6], extracting features at multiple scales (l3: x/4, l4: x/8, l5: x/16, l6: x/16, l7: x/32, l8: x/32) for downstream tasks.

Feature Extraction Points: Strategically selected intermediate layers (l3, l5, l7) for detection heads based on receptive field analysis, providing 24×80, 12×40, 6×20 feature maps for 192×640 input resolution.

Pretrained Weight Loading: Loaded ImageNet-pretrained MobileNetV2 weights excluding final classification layer, freezing early layers during initial training to preserve learned features.

Task 2: Light-Weight RefineNet Decoder Architecture

Multi-Resolution Fusion: Implemented 4-stage CRP (Chained Residual Pooling) blocks with iterative pooling and residual connections, aggregating context at multiple scales through max-pooling with 5×5 kernels.

Adaptive Convolutions: Added 1×1 adaptation layers (conv_adapt2/3/4) between CRP blocks, reducing channel dimensions to 256 while maintaining spatial resolution through bilinear upsampling.

Task-Specific Heads: Created separate output branches with grouped convolutions (groups=256) for segmentation (6 classes) and depth (1 channel), using 3×3 convolutions with ReLU6 activation.

Task 3: Dual Detection Head Implementation

YOLOv8 Head Architecture: Developed anchor-free detection with decoupled classification and regression branches, using Distribution Focal Loss for bounding box regression with reg_max=16 for 4× scale factor.

SSD300 Head Integration: Implemented 6-level feature pyramid generating 8,732 default anchors, with MultiBox loss combining Smooth L1 localization and softmax cross-entropy classification.

Feature Alignment: Created pooling and projection layers (nn.Conv2d) to match decoder outputs (256 channels) with detection head inputs (64/128/256 channels), maintaining stride consistency [8,16,32].

Task 4: Multi-Task Loss Formulation

Dynamic Weight Learning: Implemented uncertainty-based task weighting with learnable parameters λ₁, λ₂, λ₃, automatically balancing loss magnitudes across tasks during training.

Gradient Harmonization: Applied gradient clipping (max_norm=10) and loss normalization to prevent any single task from dominating shared encoder updates.

Task 5: Knowledge Distillation Pipeline

Teacher Model Setup: Trained specialized single-task models achieving baseline performance: 86.1% mIoU (segmentation), 3.659m RMSE (depth), 72.8% mAP (YOLOv8).

Staged Training Strategy: First loaded pretrained segmentation+depth model from Nekrasov et al., then froze encoder/decoder while training detection head for 100 epochs.

Pseudo-Label Generation: Used teacher models to generate soft targets for student network, applying temperature scaling (T=3) for knowledge transfer.

Task 6: Inference Optimization

Batch Processing Pipeline: Implemented efficient data loading with collate_fn for variable bbox counts, maintaining batch integrity through indexed target tensors.

Post-Processing Optimization: Developed Task-Aligned Assigner for YOLOv8 with topk=10 candidate selection, multiclass NMS with IoU threshold=0.3 for detection filtering.

Mixed Precision Inference: Utilized FP16 inference with PyTorch AMP autocast, achieving 40% speedup while maintaining numerical stability through loss scaling.

Results

The HydraNet architecture successfully unifies three perception tasks with minimal accuracy degradation. YOLOv8 variant maintains 99% detection accuracy (72.1% mAP) while achieving 87.6% segmentation retention (75.4% mIoU) and 94% depth retention (3.89m RMSE). SSD variant trades accuracy for efficiency with 40.9% mAP detection, 68.2% mIoU segmentation, and 4.56m depth RMSE, using 35% less GPU memory. Real-time performance exceeds 40 FPS on NVIDIA Jetson AGX Xavier and 15 FPS on Jetson Nano. The shared encoder reduces total parameters by 65% compared to three separate models. Knowledge distillation improves multi-task performance by 8-12% across all metrics. Dynamic loss balancing prevents catastrophic forgetting, maintaining stable training convergence. Qualitative results show strong spatial alignment between detected objects, segmented regions, and depth discontinuities.

Key Insights

-

Feature Sharing Efficacy: Shared MobileNetV2 encoder successfully extracts generalizable features for all three tasks, validating multi-task learning hypothesis for perception.

-

Detection Head Trade-offs: YOLOv8 excels in accuracy while SSD offers deployment flexibility, demonstrating importance of architectural options for edge deployment.

-

Loss Balancing Criticality: Dynamic uncertainty-based weighting essential for preventing task dominance, particularly important when combining regression and classification objectives.

-

Knowledge Distillation Value: Teacher-student framework provides 8-12% performance boost, suggesting pretrained single-task models contain valuable task-specific knowledge.

-

Inference Bottlenecks: Post-processing (NMS, decoding) accounts for 30% of inference time, highlighting optimization opportunities beyond model architecture.

Future Work

-

Transformer Integration: Replace CNN decoder with vision transformer blocks for improved long-range dependency modeling

-

Temporal Consistency: Add recurrent connections or 3D convolutions for video sequence processing with temporal smoothing

-

Panoptic Segmentation: Extend segmentation head to instance-level predictions combining semantic and instance segmentation

-

Neural Architecture Search: Automate encoder-decoder design using NAS for optimal task-specific feature sharing

-

Quantization Study: Implement INT8 quantization with QAT for 4× inference speedup on edge devices

-

Additional Tasks: Incorporate lane detection, optical flow, and 3D object detection into unified architecture